By M. GIERCZAK and P. BUDZEWSKI (Predictive Solutions)

OVER THE YEARS, IBM SPSS MODELER® (FORMERLY CLEMENTINE) EARNED THE REPUTATION OF BEING THE MODEL OPEN ENVIRONMENT ACROSS DATA MINING PLATFORMS.

Its creators were conscious of two things: that they should not create a software program in a vacuum for its own sake, and that future needs and trends could not be precisely defined. This need for openness was a key driver in the early development of the Clementine data mining platform resulting in notable features such as:

- The automatic generation of SQL code by the Clementine application (as opposed to SQL that was hard-coded by the analyst), allowing for fast communication with databases.

- The CEMI interface which opened up the possibility to use additional data mining algorithms, e.g. libraries written in C, developed by third parties.

- The Solution Publisher technology which provided a scoring mechanism that worked independently of the Clementine application environment.

Today, we have tried to reflect this same level of openness in PS CLEMENTINE PRO, a solution built on IBM SPSS Modeler, with a particular focus on integration with R and Python. In this post, we will focus on Python and describe the benefits derived from using it. Whilst Python co-exists with the earlier script language version (the so-called legacy script, or “LS” for short), it has a significantly broader set of capabilities for interaction with the IT environment.

A dedicated modeler.api library is installed together with PS CLEMENTINE PRO. This library lets us control objects in streams and supernodes, and create scripts that manage multiple streams (session scripts). Whilst this can also be achieved using LS, Python can do more. Let’s look at the first example to see some of the differences.

PYTHON AS A SCRIPT LANGUAGE

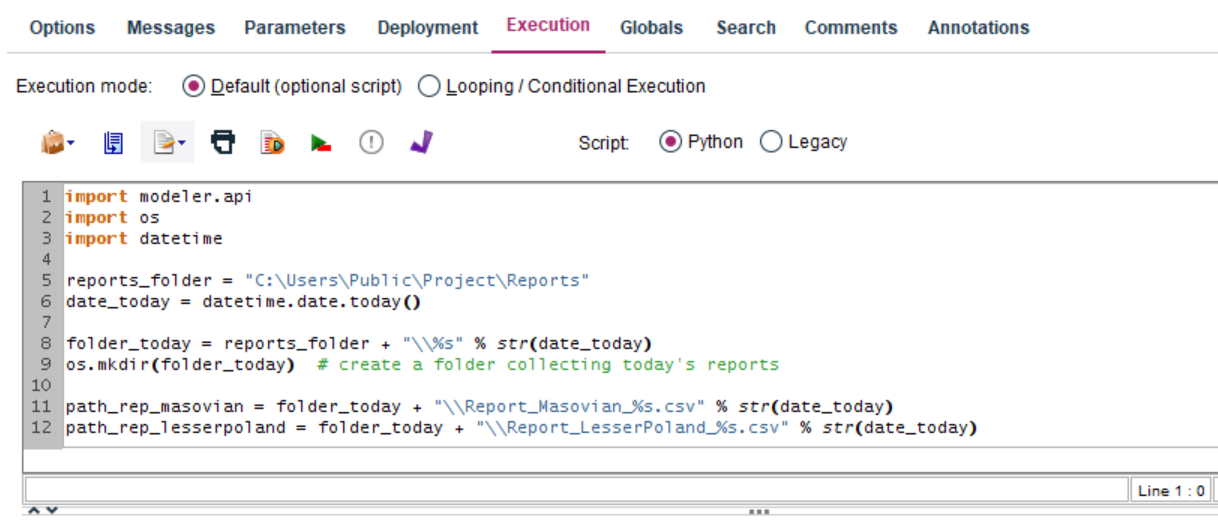

One of the features of Python is the ability to use numerous libraries. For example, the os library provides access to files and folders on the computer’s hard disk. Thus, from within PS CLEMENTINE PRO we can not only define access paths to files (which is also possible using LS), but also create folders, collect file information, remove files or rename them. The datetime library is a useful tool for saving reports automatically. In our case, the reports from each day are placed in separate, automatically created folders. Below is a sample of the code used to create a subfolder named after today’s date (lines 1-9).

Figure 1. Folder creating script with the use of the os module
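For readers who cannot see the screenshot, the idea can be sketched in plain Python as follows. This is a minimal illustration rather than the script from Figure 1: the helper name `make_dated_folder`, the `YYYY-MM-DD` folder naming and the temporary directory standing in for the reports location are all assumptions made for the example.

```python
import os
import tempfile
from datetime import date


def make_dated_folder(base_dir, today=None):
    """Create (if needed) a subfolder of base_dir named after the given date.

    Hypothetical helper: the name and the YYYY-MM-DD format are assumptions.
    """
    today = today or date.today()
    folder = os.path.join(base_dir, today.strftime("%Y-%m-%d"))
    # Only create the folder when it does not exist yet, so the script
    # can be run several times on the same day without raising an error.
    if not os.path.isdir(folder):
        os.makedirs(folder)
    return folder


# A temporary directory stands in for the real reports location.
reports_root = tempfile.mkdtemp()
daily_folder = make_dated_folder(reports_root)
print(daily_folder)
```

Running the script again on the same day returns the same path, so every report generated that day ends up in one folder.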

ACCELERATING YOUR WORK

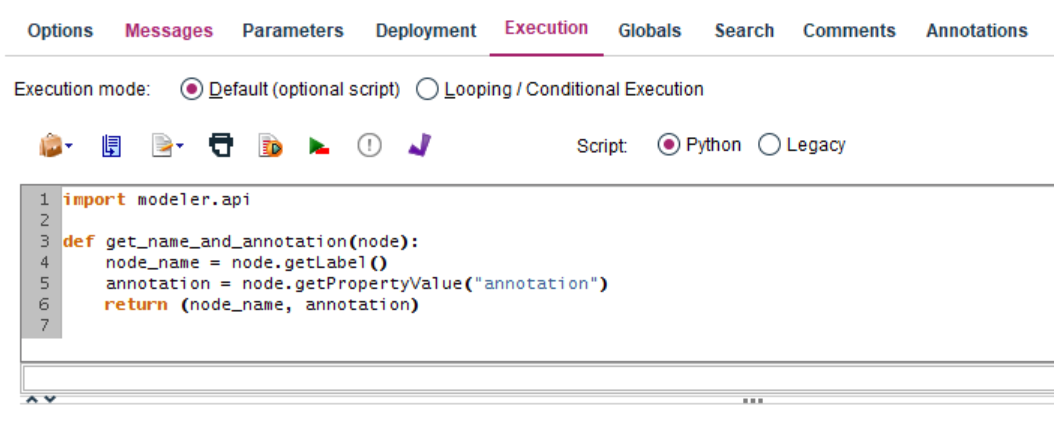

Python also allows the creation of your own functions, which can greatly simplify your work. This is very useful for handling complicated operations, loops or conditional instructions, and will not only shorten the working time, but will also shorten the code itself, improving its readability and reducing errors.

Figure 2. Python sample customized function
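As a rough illustration of how a small function can replace repeated code, the sketch below tidies a list of field names. It is not the function from Figure 2: `clean_field_name`, the sample names and the `"unnamed"` fallback are hypothetical and serve only to show a function combined with a conditional and a loop.

```python
def clean_field_name(name):
    """Return a lowercase field name with underscores instead of spaces.

    Hypothetical helper used only for illustration.
    """
    parts = name.strip().lower().split()
    # Conditional: fall back to a placeholder when the name is blank.
    if not parts:
        return "unnamed"
    return "_".join(parts)


raw_names = ["  Customer ID", "Date of  Birth ", "INCOME", "   "]

# The loop applies the same rule to every name without repeating the logic.
clean_names = [clean_field_name(n) for n in raw_names]
print(clean_names)
```

Because the rule lives in one place, changing it later (for example, to strip punctuation as well) means editing a single function rather than every line that touches a field name.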

NEW ALGORITHMS

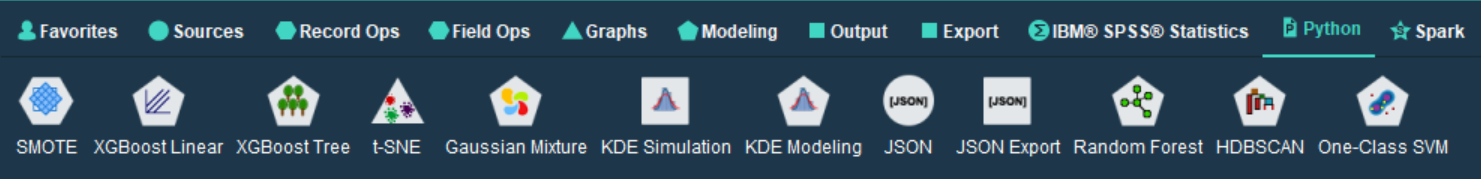

Python in PS CLEMENTINE PRO is used not only in stream scripts, but also by some nodes. We can find them on the node palette in the Python tab.

Figure 3. Python tab on the node palette

EXTENSIONS

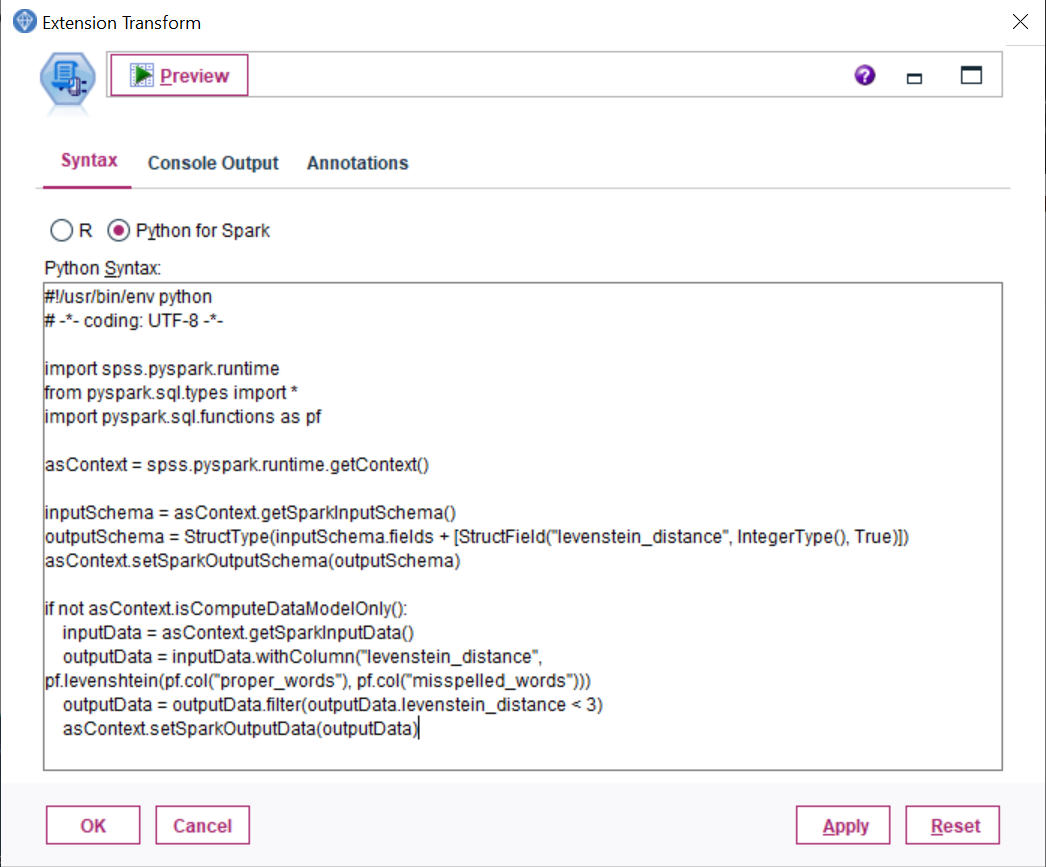

For more advanced users, PS CLEMENTINE PRO offers additional functionality through extension nodes. These are nodes in which you can enter code written in R or in PySpark, the Python API to Spark, which is a programming environment for distributed computing.

Figure 4. Extension nodes

These nodes correspond to the standard types of PS CLEMENTINE PRO nodes, so we have nodes for data import, record and variable processing, data modeling and export. With them we can, for example:

- download data from a URL (and other sources handled by Python),

- load any number of files at once and flexibly combine them inside the same node,

- create variables in a new way, using PySpark functions,

- edit previous variables with the use of Python functions,

- create and assess models unavailable in basic Modeler nodes,

- create our own versions of Python algorithm interfaces with any options we recognize as important,

- generate results in the text and visual form,

- format and export data, e.g. to the JSON file format.

NOTE: The use of these functions requires at least basic knowledge about PySpark.

Figure 5. Sample code in PySpark framework

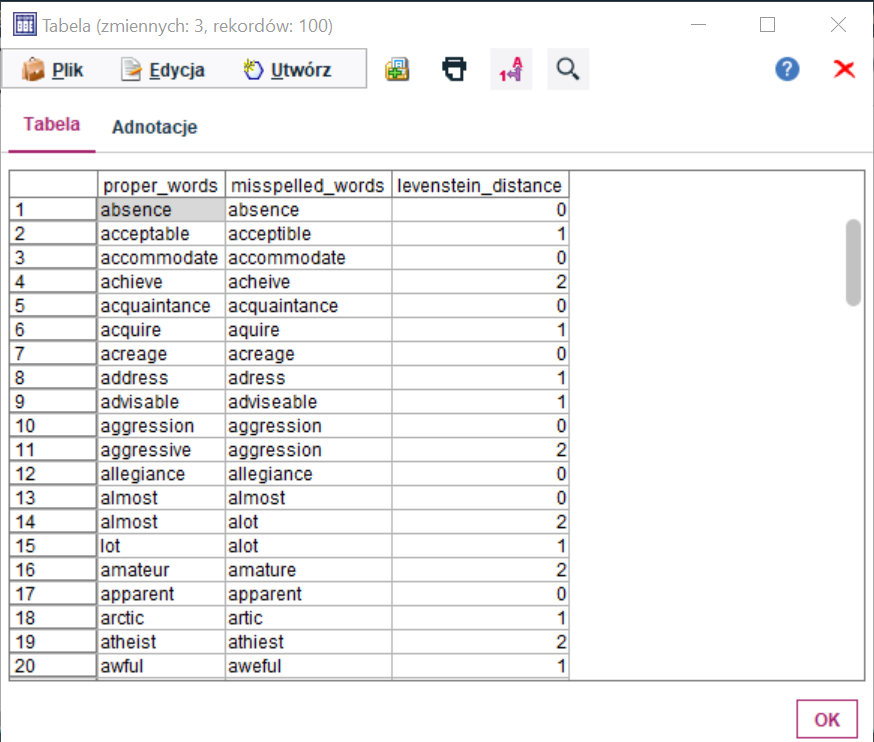

Figure 6. Result from the Table node, containing the calculated Levenshtein distance
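To make clear what the distance in Figure 6 measures, here is a plain-Python sketch of the Levenshtein distance: the smallest number of single-character insertions, deletions or substitutions needed to turn one string into another. It is an illustration of the metric only, not the code from Figure 5. Inside an extension node one would more likely rely on the built-in `pyspark.sql.functions.levenshtein` column function, which computes the same quantity.

```python
def levenshtein(a, b):
    """Return the edit distance between strings a and b."""
    # previous[j] holds the distance between the prefix of a processed
    # so far and the first j characters of b.
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # delete ch_a
                current[j - 1] + 1,      # insert ch_b
                previous[j - 1] + cost,  # substitute (or keep) the character
            ))
        previous = current
    return previous[-1]


print(levenshtein("kitten", "sitting"))  # 3
```

A distance of 0 means the two strings are identical, which is why the metric is handy for spotting near-duplicate names or misspelled values.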

Extension nodes allow the use of various Python libraries, so nothing prevents us from using NumPy, pandas, Matplotlib, Seaborn or Scikit-learn in our stream. This gives us a very broad set of possibilities for both data modeling and data processing.

The Transformation Extension node also makes it possible to apply complex regular expressions through the re module. These in turn may support text mining studies, a broad topic which warrants a separate discussion.
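As a small taste of what the re module offers, the sketch below pulls identifiers out of free-text notes. The pattern (two capital letters, a hyphen, four digits) and the sample notes are invented for the example, and the code runs as ordinary Python rather than inside an extension node.

```python
import re

# Hypothetical order-ID format: two capital letters, a hyphen, four digits.
ORDER_ID = re.compile(r"\b[A-Z]{2}-\d{4}\b")

notes = [
    "Customer called about order AB-1234 and CD-5678.",
    "No order mentioned.",
    "Refund for ZX-0042 approved",
]

# findall returns every non-overlapping match in each note.
found = [ORDER_ID.findall(note) for note in notes]
print(found)
```

The same compiled pattern could be applied row by row to a text field, turning unstructured notes into values that downstream nodes can count or join on.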